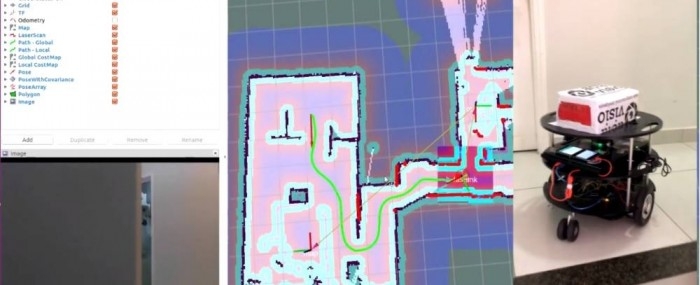

The system knows the warehouse floor plan, so an automated guided vehicle for materials handling can move about safely, according to the program’s developers (image: Acta Visio)

A system of cameras tracks eye movements, processes images and inputs them into a computer, where special software translates the data and sends commands to telemetry-controlled devices.

By Eduardo Geraque | FAPESP Innovative R&D – With the aim of developing electronic systems that mimic human vision, computer scientists at Acta Visio have created solutions for use in hospitals, factories, and other facilities. A major project now under development by the startup, which is based in Campinas, São Paulo State (Brazil), involves refining an electronic system that enables people with quadriplegia to operate drones or handle external tools such as cameras and claws using only their eyes.

According to co-founder Marcus Lima, Acta Visio has successfully tested a prototype eye-tracking system, embedded in first-person view (FPV) goggles, that controls a drone customized for people with quadriplegia. The project was supported by FAPESP’s Innovative Research in Small Business Program (PIPE) between May 2017 and April 2018. The next step is to develop the commercial side of the project.

The software developed by Acta Visio’s team of six researchers also powers an app that enables people with physical disabilities to communicate by blinking. “The system we developed is similar to the one used by Stephen Hawking,” Lima says. The late British theoretical physicist had amyotrophic lateral sclerosis (ALS) and progressively lost the use of almost all his muscles. Hawking died in 2018 at the age of 76.

The app runs on a smartphone, Lima explains. The front camera captures eye commands, and the electronic system generates text that is automatically converted into speech.
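
The article does not explain how the app tells a deliberate "command" blink apart from an ordinary one. One common approach, shown below purely as a hypothetical sketch, is to threshold a per-frame eye-openness measure (for example an eye aspect ratio computed from facial landmarks) and count only closures that last longer than a natural blink. The class name, threshold and frame count are illustrative assumptions, not Acta Visio's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class BlinkDetector:
    """Flags deliberate (long) blinks in a per-frame eye-openness signal.

    Hypothetical sketch: openness is assumed to be a value such as the eye
    aspect ratio, near 0 when the eye is closed and larger when it is open.
    """

    closed_threshold: float = 0.2  # below this the eye counts as closed (assumed)
    min_frames: int = 8  # shorter closures are treated as involuntary blinks (assumed)
    _closed_run: int = field(default=0, init=False)

    def update(self, openness: float) -> bool:
        """Feed one frame; return True on the frame a deliberate blink ends."""
        if openness < self.closed_threshold:
            self._closed_run += 1
            return False
        deliberate = self._closed_run >= self.min_frames
        self._closed_run = 0
        return deliberate


def deliberate_blinks(signal: list[float]) -> list[int]:
    """Return the frame indices at which deliberate blinks were detected."""
    detector = BlinkDetector()
    return [i for i, value in enumerate(signal) if detector.update(value)]
```

For instance, with `signal = [0.3] * 5 + [0.1] * 3 + [0.3] * 2 + [0.1] * 10 + [0.3] * 2`, `deliberate_blinks(signal)` returns `[20]`: the short three-frame closure is ignored, while the ten-frame closure is reported when the eye reopens. Detected blinks could then drive letter selection before text-to-speech, a step omitted here.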

The same system can be used to control devices such as drones, he adds. The process is relatively simple and comprises three stages. In the first stage, eye movements are tracked by a camera, and the captured images are preprocessed before being sent to a computer. In the second stage, software receives, processes and translates the data and sends commands to the drone. In the third stage, the drone is controlled by a telemetry system that communicates with the computer via Wi-Fi or radio frequency signals. The drone climbs, descends or moves left or right as the user moves his or her eyes in the corresponding direction.
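
The second and third stages can be illustrated with a minimal Python sketch. It assumes, hypothetically, that stage one delivers gaze offsets normalized to [-1, 1] and that the telemetry link is exposed as a plain `send` callable; the `Command` names, the dead-zone value and the send-only-on-change rule are illustrative choices, not details reported by Acta Visio.

```python
from enum import Enum
from typing import Callable, Iterable, Optional


class Command(Enum):
    HOVER = "hover"
    UP = "up"
    DOWN = "down"
    LEFT = "left"
    RIGHT = "right"


def gaze_to_command(x: float, y: float, dead_zone: float = 0.25) -> Command:
    """Stage 2: translate a gaze offset into a drone command.

    x and y are assumed to be normalized to [-1, 1], with (0, 0) meaning the
    user is looking straight ahead, +x to the right and +y upward.
    """
    if abs(x) < dead_zone and abs(y) < dead_zone:
        return Command.HOVER  # gaze near the centre: hold position
    if abs(y) >= abs(x):  # vertical movement dominates
        return Command.UP if y > 0 else Command.DOWN
    return Command.RIGHT if x > 0 else Command.LEFT


def control_loop(
    gaze_samples: Iterable[tuple[float, float]],
    send: Callable[[Command], None],
) -> None:
    """Stages 2 and 3: classify each preprocessed gaze sample and hand the
    command to a telemetry link (`send`), transmitting only on change."""
    last: Optional[Command] = None
    for x, y in gaze_samples:
        command = gaze_to_command(x, y)
        if command is not last:
            send(command)
            last = command
```

The dead zone keeps small, involuntary eye movements from moving the drone, and transmitting only when the command changes reduces traffic over the Wi-Fi or radio link.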

In another project supported by FAPESP’s PIPE program, still under development, the camera system will be used in hospital intensive care units (ICUs) to monitor staff hand hygiene, which is considered indispensable to reducing hospital-acquired infection rates.

“Today, hospital ICUs enforce proper hand hygiene using human observers. We aim to automate the process with a monitoring system,” Lima says.

The idea is that a sensor next to the wash basin will record staff performing hand hygiene. If the computer judges the procedure to have been performed correctly, a green light on the individual’s badge will come on. A red light will indicate improper hand hygiene, and the procedure must be repeated. The system is in the initial stage of development and is being built in partnership with Hospital Cajuru in Curitiba, Paraná State.
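
The article does not say which protocol the system checks or how the computer reaches its verdict. As a hypothetical sketch only, the badge decision could be reduced to checking that every required step was recognized and lasted long enough. The step names, the three-second minimum and the idea of a vision model that labels steps are all assumptions made for illustration.

```python
from typing import Mapping

# Hypothetical list of required steps; the article does not say which
# hand-hygiene protocol the system checks against.
REQUIRED_STEPS = ("palms", "backs_of_hands", "between_fingers", "thumbs", "fingertips")


def badge_light(observed: Mapping[str, float], min_step_seconds: float = 3.0) -> str:
    """Return 'green' if every required step was seen for long enough, else 'red'.

    `observed` maps step names, as labelled by an assumed vision model watching
    the wash basin, to the number of seconds each step was performed.
    """
    for step in REQUIRED_STEPS:
        if observed.get(step, 0.0) < min_step_seconds:
            return "red"  # missing or too-short step: procedure must be repeated
    return "green"
```

For example, `badge_light({step: 5.0 for step in REQUIRED_STEPS})` returns `"green"`, while skipping or rushing any single step returns `"red"`.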

A giant jigsaw puzzle

“The system with the best commercial performance is one that checks the assembly of large industrial devices or components using cameras,” Lima says. In heavy industry, for example, manufacturers of the stamping presses used on automotive production lines must assemble giant jigsaw puzzles: large machines with many welded parts that must fit together with millimetric precision.

“Our system of cameras, mobile or fixed, is capable of checking all splices, welds and other placements, which it compares with the 3D mechanical design of each part. Any piece that is misaligned relative to the design generates an alert for the assembly line operator,” Lima explains. Electronic scanning for assembly flaws takes four to five hours, compared with up to two days for conventional manual inspection.
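
The comparison Lima describes, measured placements against the 3D design, can be sketched as a tolerance check. This minimal example assumes, hypothetically, that each part's position has already been extracted from the camera data and registered into the design's coordinate frame (a substantial step omitted here); the part names and the 1 mm tolerance are illustrative, not figures from Acta Visio.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Point3D:
    x: float  # millimetres
    y: float
    z: float


def misaligned_parts(
    design: dict[str, Point3D],
    measured: dict[str, Point3D],
    tolerance_mm: float = 1.0,
) -> list[str]:
    """List the parts whose measured position deviates from the design.

    Assumes the camera measurements have already been registered into the
    coordinate frame of the 3D design; that alignment step is omitted here.
    """
    alerts = []
    for part, expected in design.items():
        actual = measured.get(part)
        if actual is None:
            alerts.append(part)  # part not detected by the cameras at all
            continue
        deviation = math.dist(
            (expected.x, expected.y, expected.z), (actual.x, actual.y, actual.z)
        )
        if deviation > tolerance_mm:
            alerts.append(part)
    return alerts
```

Each name in the returned list would correspond to one alert on the operator's screen. Treating an undetected part as misaligned is a conservative choice: a missing weld should not pass inspection silently.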

Acta Visio has another project under way. According to Lima, the idea is to use automated guided vehicles (AGVs) instead of forklifts in large warehouses. The operator’s eyes will be replaced by cameras and presence sensors, which will guide materials-handling vehicles around the facility based on a digital version of the floor plan, ensuring safety and accuracy, according to the developers of the program.
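
The article does not name the navigation method. As an illustrative sketch under that caveat, a digital floor plan can be represented as a grid, and a shortest route can be found with breadth-first search, treating cells that presence sensors report as occupied as temporarily blocked. The grid encoding, the `plan_route` name and the `blocked` parameter are hypothetical. Real AGVs typically add localization and richer planners such as A*.

```python
from collections import deque
from typing import Optional

Cell = tuple[int, int]  # (row, column) on the floor-plan grid


def plan_route(
    floor: list[str],
    start: Cell,
    goal: Cell,
    blocked: frozenset[Cell] = frozenset(),
) -> Optional[list[Cell]]:
    """Breadth-first search for a shortest route on a grid floor plan.

    '#' marks shelving or walls, '.' marks free floor; `blocked` holds cells
    that presence sensors currently report as occupied (e.g. a person).
    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(floor), len(floor[0])
    came_from: dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            step: Optional[Cell] = cell
            while step is not None:  # walk back to the start
                path.append(step)
                step = came_from[step]
            return path[::-1]
        r, c = cell
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (r + dr, c + dc)
            if (
                0 <= nxt[0] < rows
                and 0 <= nxt[1] < cols
                and floor[nxt[0]][nxt[1]] != "#"
                and nxt not in blocked
                and nxt not in came_from
            ):
                came_from[nxt] = cell
                queue.append(nxt)
    return None
```

Because every move costs the same on a grid, breadth-first search returns a shortest route. Re-running it whenever the presence sensors update `blocked` lets the vehicle detour around people or obstacles.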

Company: Acta Visio

Site: http://www.acta-visio.com

Republish

Agência FAPESP licenses its news under Creative Commons (CC-BY-NC-ND) so that it can be republished free of charge and in a simple way by other digital or print outlets. Agência FAPESP must be credited as the source of the republished content, and the name of the reporter (if any) must be attributed. These rules are detailed in FAPESP's Digital Republishing Policy.